Focus 3: Foundational Research in AI Risk Communication (RC) for Environmental Science (ES) Hazards

“When [weather forecasters] cannot easily understand the workings of a probabilistic product or evaluate its accuracy, this reduces their trust in information and their willingness to use it.”

From Recommendations for Developing Useful and Usable Convection-Allowing Model Ensemble Information for NWS Forecasters (Demuth et al. 2020)

Broad Goals

Leaders: Demuth (NCAR), Bostrom (UW)

Members: Thorncroft (Albany). Ebert-Uphoff, Musgrave, Schumacher (CSU). Hickey (Google). Williams (IBM). Cains, Gagne, Wirz (NCAR). He, Lowe (NCSU). McGovern (OU). King, Tissot, White (TAMUCC). Madlambayan (UW).

1. Increase knowledge and understanding of how transparency, explanation, reproducibility, and representation of uncertainty influence trust in AI for environmental science (ES) among influential user groups.

- Develop trustworthy AI interview and knowledge elicitation protocols to probe the roles of specific types of models and model updates in environmental forecasting decisions, attitudes toward them, and how these are influenced by transparency, explanations, reproducibility, representations of uncertainty, trust in the model(s), model outputs, modelers and other contextual factors for environmental science.

- Develop candidate measures of trust, satisfaction, understanding, and willingness to rely on AI/ML for environmental science.

Progress:

Year 4

- Developed and pre-tested an experimental framework for assessing the extent to which implicit biases might affect AI/ML trustworthiness judgments, using a brief implicit association test (BIAT) adapted from implicit bias research in other domains.

- The study protocol also includes explicit (self-reported) trust assessments, has gone through human subjects review, and is now being pre-registered on OSF.

- Designed, managed, and implemented an approach to illustrate the value of a social science research method (quantitative content analysis) for enhancing the robustness, reproducibility, and replicability of hand-labeling for supervised ML. This included an empirical analysis of hand-labeling road surface conditions in camera images. Submitted a manuscript that describes the method and the case study results.

- Wirz et al., under review. Increasing the reproducibility and replicability of supervised AI/ML in the earth systems science by leveraging social science methods.

- In the spirit of open science and data reuse, we published a set of data collection instruments and some data, but not the forecaster transcripts, which cannot be deidentified and therefore cannot be shared under human subjects requirements.

- Goals 1, 2, and 3 often co-occur, so we suggest reviewing the progress under Goals 2 and 3 (see below) for a full picture of our activities and advancements.

Year 3

- Submitted a manuscript (Bostrom et al. 2023) stemming from the risk communication workshop (held at the end of Year 2 in August 2022) pertaining to AI trust and trustworthiness.

- Worked with the workshop participants from computer science, atmospheric science, risk and science communication, environmental science, psychology, sociology, and cognitive and industrial systems engineering to co-author a paper that synthesizes the workshop discussions and identifies four foci for future research:

- user-oriented development and co-development

- understanding and measuring trust and trustworthiness

- goal alignment, calibration, and standard setting

- integrating risk and uncertainty communication research with research on trust in AI.

- Received a revise-and-resubmit (R&R) decision on the manuscript, which is now being revised for resubmission by August 2023.

- Goals 1, 2, and 3 often co-occur, so we suggest reviewing the progress under Goals 2 and 3 (see below) for a full picture of our activities and advancements.

Years 1 and 2

- Two graduate research assistants (GRAs) conducted a systematic review of the AI/ML literature on trust, trustworthiness, interpretability, and explainability

- Developed initial draft summaries of empirical findings on trust and trustworthiness in AI and on the interpretability and explainability of AI

- Began interdisciplinary reading group meetings

- Held initial discussions and drafted a glossary and taxonomy

- Shared initial literature review findings at site-wide meetings and at the TAI4ES summer school

- An RC tutorial is being developed for Spring 2022

- Planning has begun for a RC workshop for Summer 2022

2. Develop models to estimate how attitudes toward and perceptions of AI trustworthiness influence risk perception and use of AI for ES.

- Enhance existing and develop new theoretical frameworks for experts’ assessment and uses of trustworthy AI/ML information

- Model and test the influence of existing and newly developed XAI and interpretable AI approaches, and of AI/ML interactions for environmental science, on trust in and use of AI/ML for environmental science.

- Predict the influence of new XAI approaches and novel AI/ML interactions on trust in and use of AI/ML for environmental science.

Progress:

Forecaster Interviews about Severe Convective XAI

Year 4

- Completed all qualitative and quantitative analyses of interviews and pre-interview surveys conducted with NWS forecasters.

- Synthesized the results about the model attributes that forecasters deem essential and useful for familiarizing themselves with new guidance and using it operationally for forecasting.

- Also synthesized multiple, complex themes, including how forecasters want to understand new model guidance, interact with guidance to explore and evaluate it, use their conceptual models of atmospheric processes to make sense of new guidance, and evaluate different AI/ML features to assess the trustworthiness of new guidance. Submitted the paper, revised it, and had it accepted.

Year 3

- Completed reflexive thematic analysis of forecaster interviews, which incorporated AI/ML predictions of severe hail and severe storm mode.

- Synthesized themes relating to forecasters’ (a) guidance use decisions, (b) perceptions of and attitudes toward new-to-them AI/ML-derived guidance and its trustworthiness, and (c) willingness to use the guidance for their professional decision-making.

- Completed associated quantitative content analysis to identify mentions of trust and trustworthiness in forecasters’ guidance use decisions.

- Drafted a manuscript of the results and received feedback from the interdisciplinary authorship team. Planning to submit by August 2023.

Year 2

- Incorporated cognitive think-aloud and interactive methods into the interview instrument to improve the type and quality of the interview data collected

- Conducted 18 interviews with forecasters from NWS and IBM

- For the 3D-CNN storm mode guidance, conducted an initial inductive analysis of parts of the interview data to give developers feedback for refining the guidance for testing in the Hazardous Weather Testbed

Year 1

- Co-developed semi-structured interview protocol; in doing so, identified the need to determine definitions of explainability, interpretability, trustworthiness, etc.

- Developed, tested, and revised a coding scheme for deductively analyzing interview data and fostering collaborative analysis with AI2ES team members

- Conducted pre-tests of interview protocol, identified gaps, revised accordingly, and finalized the interview protocol

- Developed a sampling frame for interviews and initiated sampling for formal interviews

- Conducting formal interviews and cleaning the interview data

Trustworthiness of ML guidance for Severe Convection


- Co-developed the survey

- Integrated key features of XAI into development of survey items, e.g., performance, interactivity, failure modes

- Collected n=92 completed survey responses (70% response rate) from testbed participants (n=36 forecasters, n=38 researchers, and others) in May-June 2021

- Analyzed survey results

- Presented at the NOAA 3rd AI workshop in September 2021

Interviews about Winter and Coastal/Ocean with Users

Year 4

- Winter Use-Case:

- Precipitation-type (ptype): This work included participating in efforts to quality-control the data used for model development (such as the citizen-science-based observational datasets), running the ptype model in real time during Winter 2023-2024 to develop an archive of cases, discussing physical and other explainable AI attributes of the model, evaluating the model verification in different ways, and developing ways of interacting with the model output.

- Refined the goals and research approach for interactive, interview-based data collection with weather forecasters, focused on providing the baseline information they need to orient themselves to the new AI/ML model.

- Investigated how forecasters interact with the guidance at a point, over space, and over time, and how transparency about model performance shapes their perceptions of its trustworthiness.

- For the Winter Images group: Guided the development of their codebooks and procedures and helped label data for their models.

- Coastal Use-Case:

- Developed a codebook for systematically evaluating forecasters’ responses and reactions to the AI/ML product using quantitative content analysis. We developed, tested, and refined a coding procedure that identified the types of evaluations forecasters made, and three members of the RC team then applied this analytic approach to systematically evaluate the coastal interviews.

- We have also analyzed the data from these interviews to create a framework for understanding how expert decision makers evaluate and make use decisions about new AI/ML decision support systems. This framework and the quantitative analyses are being developed into a journal publication.

- Trustworthy AI for ocean eddy prediction with users:

- Met approximately every two weeks with the AI-oceans team to iteratively co-develop an interview protocol, including think-aloud, for the NCSU OceanNet loop current eddy prediction model for the Gulf of Mexico.

- The interview protocol examines trust in and trustworthiness of an ensemble approach to loop current eddy predictions with lead times of 30 and 120 days.

- Decided to focus on oil and gas metocean specialists as the intended expert users, based on a previously established relationship with a point of contact within the Gulf of Mexico metocean specialist community.

- Developed a recruitment strategy based on the limited number of metocean specialists operating within the Gulf of Mexico and on legal considerations between the research institutions (NSF NCAR, UW) and private oil and gas companies.

- On schedule for data collection in Summer 2024.


Year 3

- Winter Use-Case:

- Met approximately every two weeks with the AI-ptype group to co-develop the research goals and the corresponding (a) ML approaches for ptype nowcasting and prediction and (b) RC research questions for research with forecasters and transportation officials.

- Focused on interacting with p-type predictions to understand the model inputs and outputs, investigate model sensitivity, and assess performance.

- Began designing a mixed-methods approach, with qualitative observations or interviews followed by quantitative experiments, to

- (a) understand users’ forecast processes, informational needs, and decision-making related to p-type and

- (b) elicit their interpretations, perceived trustworthiness, and potential use of the ML tool, including its key features (e.g., representations of uncertainty, interactivity)

- Trustworthy (X)AI for FogNet with user interviews:

- Co-developed an interview protocol, including think-aloud, for coastal fog with AI and atmospheric scientists to (a) study trust in and trustworthiness of (X)AI for FogNet (coastal fog prediction) and (b) assess the generalizability of findings from our severe convection research.

- The interview protocol newly explores forecasters’ assessments and uses of XAI techniques and of detailed model performance (verification).

- Developed a sampling strategy based on coastal fog climatology and recruited NWS forecasters accordingly, first via regional headquarters and then via local forecast offices.

- Have interviewed 10 NWS forecasters thus far, with plans to finalize data collection after a few more interviews in Summer 2023.


- Trustworthy AI for ocean eddy prediction with users:

- Met approximately monthly with the AI-oceans team to co-develop research goals and approaches based on their development of ML to predict loop current eddies at different lead-times.

- Held informational interviews with (a) an oil spill risk response expert, to better understand ocean eddy prediction model end users, their decision spaces, general data needs, and decision timelines, and (b) a science director from a fish conservation nonprofit organization.

- Met with an oil and gas metocean specialist to further explore data collection processes and steps with private industry partners, and to refine the research design (sampling, approach, timeline) for the metocean specialist user group.

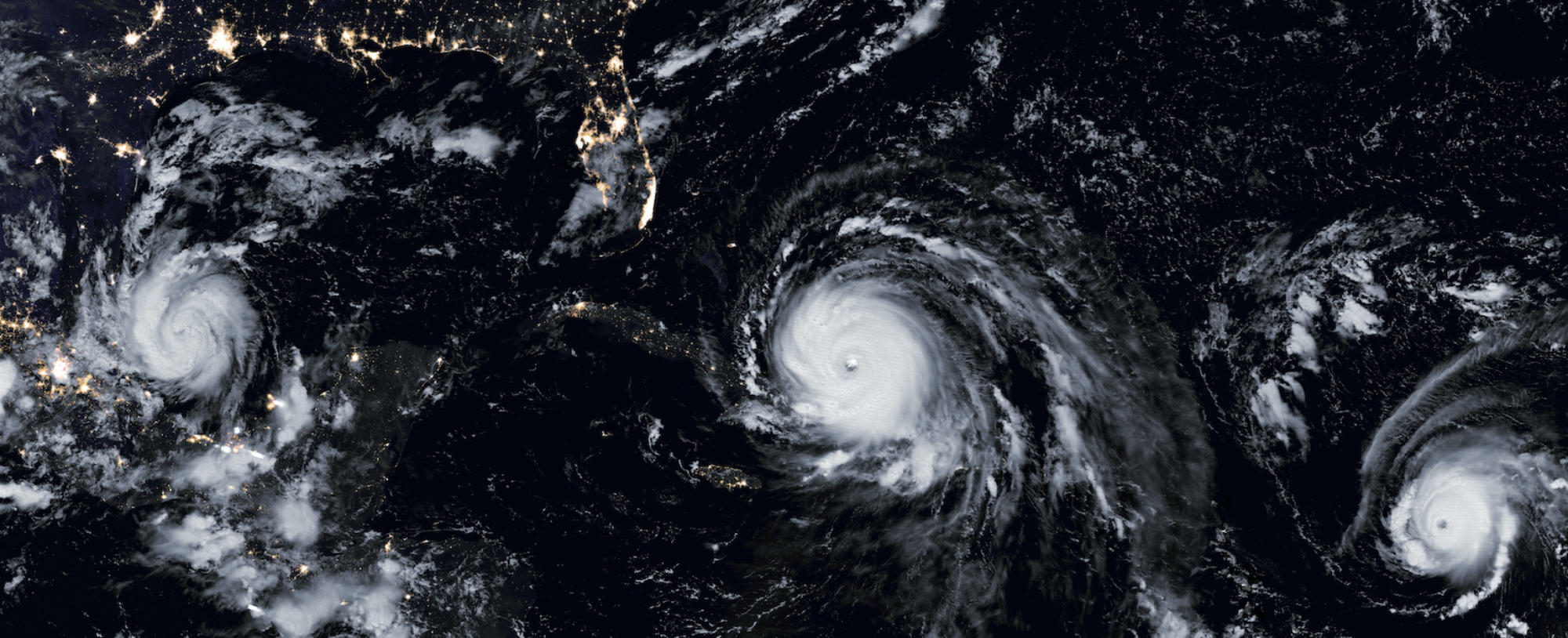

- Tropical Cyclones Use-Case

- Held a few additional collaborative research meetings in which the AI/TC and RC teams learned about each other’s research ideas and approaches and co-developed research ideas for evaluating simulated microwave imagery and its associated uncertainty with NHC forecasters.

- Discussed simulated microwave imagery as a possible collaborative research opportunity. NHC forecasters currently use microwave imagery in varied and complex ways, and the new simulated microwave imagery adds further complexity by introducing two-dimensional uncertainty estimates. The interdisciplinary team recognizes this and is carefully weighing it as a collaborative possibility.

Year 2

- Guiding Supervised ML for Precipitation from Cameras (New York Mesonet):

- Iteratively developed, tested, and refined the formal coding scheme for the images

- Achieved intercoder reliability with the coding scheme (Krippendorff’s alpha > 0.8)

- Began hand labeling images to train the model

- Trustworthy (X)AI for FogNet with user interviews:

- Co-developed a first draft of the think-aloud interview guide for pre-testing interviews.

- Developed a preliminary output format for the FogNet model

- Integrated verification metrics focused on skill and rare events to better understand the importance of verification metrics for forecasters.

- Integrated XAI results into the interview guide, which will provide the first empirical evaluation of end users’ perceptions of these techniques.

- Trustworthy AI for ocean eddy prediction with users:

- Established interdisciplinary research team across NCAR, UW, and NCSU.

- Leveraging existing research partnerships to connect with relevant end user groups.

- The oceans/RC team held an informational interview with a metocean specialist from an oil company to better understand end users’ decision space, general data needs, and decision timeline.

Year 1

- Using the foundation from the severe convective use case, which took considerable time to develop, and modifying it accordingly for the winter and coastal use cases

- Winter

- Began the risk comm-AI-winter working group and established regular meetings

- Met with DOT partners to learn about user needs

- Currently co-developing a DOT interview protocol and developing a prototype AI/ML model to use in the interviews

- Coastal

- Began the risk comm-AI-coastal and ocean working groups and established regular meetings

- Initiated two new protocols based on the current coastal team’s AI/ML efforts

3. Develop principled methods of using this knowledge and modeling to inform development of trustworthy AI approaches and content, and the provision of AI-based information to user groups for improved environmental decision making.

- Develop research methodologies for developing and evaluating AI/ML environmental science information in users’ real-world decision-making environments, including unobtrusive, low-response-burden evaluative approaches for use in operational contexts.

- Develop trustworthy AI/ML information that is deemed useful and is used by different decision-makers across environmental science domains

Progress:

Year 4

- This past year, we wrote a perspective piece that synthesizes exemplary outcomes from our Institute’s convergence research efforts, coupled with a discussion of the ways we implement convergence practices, and our plans for future research. Much (but not all) of this synthesis emphasizes the importance of use-inspired research.

- McGovern, A., J. L. Demuth, A. Bostrom, C. D. Wirz, P. E. Tissot, M. G. Cains, K. Musgrave: The value of convergence research for developing trustworthy AI for weather and climate hazards, npj (Nature Partner Journals) – Natural Hazards. (in press)

- Completed specification and pretesting of the protocol for a systematic review of trust in embedded AI, and pre-registered the protocol. Began screening abstracts and refining our approach to data extraction and quality assessment.

- Campbell et al., 2024. Determinants of study participants’ trust in embedded artificial intelligence: a systematic review protocol.

- Revised the manuscript (Bostrom et al. 2023) that stemmed from the risk communication workshop, and it was accepted. The paper, written by a highly interdisciplinary team of co-authors, summarizes the state of research on trustworthy AI and sets a research agenda for AI/ML in the environmental sciences.

- Bostrom et al., 2023. Trust and trustworthy artificial intelligence: A research agenda for AI in the environmental sciences.

- Revised and resubmitted a manuscript that provides a theoretically rooted, foundational framework for (re)conceptualizing trustworthy AI as perceptual and context-dependent, to augment other ways of conceptualizing this complex construct.

- Wirz et al., under review. (Re)conceptualizing trustworthy AI: A foundation for change. Artificial Intelligence.

- Analyzed data from 29 interviews with National Weather Service forecasters to understand how these expert users perceive AI/ML for operational forecasting. Synthesized their preferences, their familiarity with and openness to AI/ML, and their perspectives on its positives and negatives, and submitted a manuscript reporting these foundational results.

- Wirz et al., under review. Forecasters’ perceptions of AI/ML and its use in operational forecasting.


Year 3

- Developed and applied a codebook for deductive, quantitative content analysis of the concepts of “trust” and “trustworthiness”

- Finalized development of the codebook after achieving good intercoder reliability among three coders with a Krippendorff’s alpha of 0.76 for trust and 0.74 for trustworthiness. Published finalized codebook as open access via Zenodo for use by other scientists. (Wirz et al. 2023, https://doi.org/10.5281/zenodo.7113671)

- Successfully applied the coding scheme to the severe forecaster interview data, and identified mentions of trust and trustworthiness in forecasters’ responses to questions about their guidance use decisions.

- Submitted a manuscript to Artificial Intelligence Review that advances fundamental thinking by (re)conceptualizing trustworthy AI as perceptual and context-dependent.

- Reviewed literature on trust and trustworthiness from the fields of interpersonal trust, trust in automation, and risk analysis.

- Developed, refined, and synthesized the reconceptualization iteratively with the interdisciplinary team of coauthors, including AI co-authors for whom these ideas are newer and who represent the target journal audience.

- Also presented the reconceptualization at the Society for Risk Analysis, the Cooperative Institute for Research in the Atmosphere, and the National Center for Atmospheric Research.

- Designed an approach for integrating social science methods (quantitative content analysis) into the hand-labeling of training sets for supervised ML to enhance model reproducibility.

- Extended the prior year’s efforts from hand-labeling mesonet images for precipitation to hand-labeling New York State Department of Transportation images of road surface conditions.

- Drafting a methods paper, with plans to submit in Summer 2023.
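The intercoder reliability figures reported above (Krippendorff's alpha of 0.76 for trust and 0.74 for trustworthiness, and alpha > 0.8 for the mesonet image coding scheme) compare the disagreement observed among coders with the disagreement expected by chance, alpha = 1 - D_o / D_e. As a minimal sketch, assuming nominal (unordered) codes, the Python function below computes alpha from a coincidence matrix. It is illustrative only and is not necessarily the tool the team used; the function name and the example labels ("dry", "wet", "snow") are hypothetical and do not come from the team's codebooks.

```python
from collections import Counter
from itertools import permutations


def krippendorff_alpha_nominal(units):
    """Krippendorff's alpha for nominal (unordered) codes (illustrative sketch).

    `units` has one entry per coded item (e.g., one image or one interview
    segment); each entry lists the codes that the coders assigned to that
    item. Missing codes are simply left out of the inner list.
    """
    # Coincidence matrix: o[(c, k)] accumulates ordered pairs of codes (c, k)
    # that two different coders assigned to the same unit, each pair weighted
    # by 1 / (m_u - 1), where m_u is the number of codes for that unit.
    o = Counter()
    for values in units:
        m = len(values)
        if m < 2:
            continue  # a unit coded by fewer than two coders cannot be paired
        for c, k in permutations(values, 2):
            o[(c, k)] += 1.0 / (m - 1)

    # Marginal totals n_c and the total number of pairable values n.
    n_c = Counter()
    for (c, _k), weight in o.items():
        n_c[c] += weight
    n = sum(n_c.values())
    if n < 2:
        return float("nan")  # no pairable values, so alpha is undefined

    # Nominal distance: any two different codes disagree by exactly 1.
    # alpha = 1 - D_o / D_e; the common factor 1/n cancels in the ratio.
    observed = sum(w for (c, k), w in o.items() if c != k)
    expected = sum(n_c[c] * n_c[k] for c in n_c for k in n_c if c != k) / (n - 1)
    if expected == 0:
        return float("nan")  # only one code was ever used, so alpha is undefined
    return 1.0 - observed / expected


if __name__ == "__main__":
    # Hypothetical labels from three coders for four camera images; None marks
    # an image that one coder did not label.
    coded = [
        ["dry", "dry", "dry"],
        ["wet", "wet", "snow"],
        ["snow", "snow", None],
        ["wet", "wet", "wet"],
    ]
    units = [[code for code in unit if code is not None] for unit in coded]
    print(f"alpha = {krippendorff_alpha_nominal(units):.3f}")
```

With perfect agreement alpha is exactly 1, and it falls to 0 when coders agree only as often as chance would predict. For ordinal or interval codes, or for bootstrapped confidence intervals, an established implementation would be a better choice than this nominal-only sketch.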
