Glossary Term

Explainability

The degree to which a human can derive meaning from the entire model and its components through the use of post-hoc methods (e.g., verification, visualizations of important predictors).
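Permutation feature importance is one common post-hoc way to produce the "important predictors" summaries named in this definition. The sketch below is a minimal, dependency-free illustration under stated assumptions: the `black_box` function, the synthetic data, and all helper names are hypothetical stand-ins, and library implementations (e.g., in scikit-learn) differ in details such as scoring and the number of repeats.

```python
import random

def black_box(x):
    # Hypothetical stand-in for an opaque model: x[0] enters linearly,
    # x[1] and x[2] only through their product, and x[3] is ignored.
    return 3.0 * x[0] + x[1] * x[2]

def make_data(n, seed=0):
    # Synthetic inputs in [-1, 1] and noisy targets from the same function.
    rng = random.Random(seed)
    X = [[rng.uniform(-1.0, 1.0) for _ in range(4)] for _ in range(n)]
    y = [black_box(row) + rng.gauss(0.0, 0.1) for row in X]
    return X, y

def mse(y, preds):
    return sum((t - p) ** 2 for t, p in zip(y, preds)) / len(y)

def permutation_importance(model, X, y, n_repeats=10, seed=0):
    """Mean increase in MSE when a single feature column is shuffled."""
    rng = random.Random(seed)
    base_loss = mse(y, [model(row) for row in X])
    importances = []
    for j in range(len(X[0])):
        increase = 0.0
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # breaks the link between feature j and y
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            increase += mse(y, [model(row) for row in X_perm]) - base_loss
        importances.append(increase / n_repeats)
    return importances

if __name__ == "__main__":
    X, y = make_data(500, seed=1)
    for j, score in enumerate(permutation_importance(black_box, X, y)):
        print(f"x{j}: {score:.3f}")
```

Because the stand-in model never reads `x[3]`, its score is exactly zero. The scores for `x[1]` and `x[2]`, which act only through their interaction, are much smaller than the score for `x[0]` and do not reveal that the two features interact, which is one reason such summaries are only approximate.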

Related terms: Interpretability

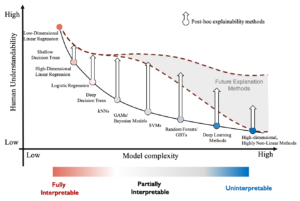

A distinction between interpretability and explainability is needed because some in the ML and statistics community favor producing interpretable models (i.e., restricting model complexity beforehand to impose interpretability; Rudin et al. 2019, 2021), while the general trend in the ML community is to keep developing partially interpretable and black box models and to apply post-hoc methods to explain them. A deep neural network (DNN) or dense random forest is uninterpretable, but it can be approximately understood through external explanation methods. Explanation methods must be approximate; otherwise, they would be as incomprehensible as the black box model itself. Contrary to other studies (e.g., Rudin et al. 2019, 2021), this is not a limitation of explanation methods, since abstracting a complex model is required for human understanding. For example, it is common in the weather community to replace the full Navier-Stokes equations with conceptual models that are easier to understand.

The degree of explainability, however, is controlled by model complexity (Molnar et al. 2020). As the number of features increases or their interactions become more complex, explanations of the ML model's behavior become less compact and possibly less accurate. At present, it is unclear how much current and future explanation methods will improve our understanding of high-dimensional, highly non-linear models (Fig. 1).
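The claim that explanations lose accuracy as feature interactions grow can be illustrated with a global surrogate, a post-hoc technique in which a simple, interpretable model is fit to a black box's predictions and its fidelity (here, R² against the black-box output) is checked. This is a minimal sketch under assumptions: the three `BLACK_BOXES` functions are hypothetical stand-ins, and a linear surrogate is only one choice (shallow decision trees are also common).

```python
import random

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x

def fit_linear_surrogate(X, preds):
    """Ordinary least squares (with intercept) fit to the black-box predictions."""
    Z = [[1.0] + row for row in X]
    k = len(Z[0])
    ZtZ = [[sum(z[i] * z[j] for z in Z) for j in range(k)] for i in range(k)]
    Zty = [sum(z[i] * p for z, p in zip(Z, preds)) for i in range(k)]
    return solve(ZtZ, Zty)

def fidelity_r2(X, preds, coef):
    """R^2 of the surrogate measured against the black box, not the truth."""
    approx = [coef[0] + sum(c * x for c, x in zip(coef[1:], row)) for row in X]
    mean = sum(preds) / len(preds)
    ss_res = sum((p - a) ** 2 for p, a in zip(preds, approx))
    ss_tot = sum((p - mean) ** 2 for p in preds)
    return 1.0 - ss_res / ss_tot

def sample_inputs(n, d, seed=0):
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(d)] for _ in range(n)]

# Hypothetical black boxes with increasing reliance on feature interactions.
BLACK_BOXES = {
    "additive": lambda x: 2.0 * x[0] - x[1] + 0.5 * x[2],
    "one interaction": lambda x: 2.0 * x[0] - x[1] + x[0] * x[1],
    "interactions only": lambda x: x[0] * x[1] + x[1] * x[2],
}

if __name__ == "__main__":
    X = sample_inputs(1000, 3)
    for name, f in BLACK_BOXES.items():
        preds = [f(row) for row in X]
        coef = fit_linear_surrogate(X, preds)
        print(f"{name:>18}: surrogate R^2 = {fidelity_r2(X, preds, coef):.3f}")
```

The additive function is reproduced essentially exactly, while the interaction-only function leaves the linear surrogate with an R² near zero: the compact explanation still exists, but it no longer describes what the model does.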

Fig. 1 Illustration of the relationship between understandability and model complexity. Fully interpretable models have high understandability (with little to gain from explainability), while partially interpretable or simpler black box models have the most to gain from explainability methods. With increased dimensionality and non-linearity, explainability methods can still improve understanding, but there is considerable uncertainty about the ability of future explanation methods to improve the understandability of high-dimensional, highly non-linear models.

Resources and References

- Interpretable Machine Learning: A Guide for Making Black Box Models Explainable, Molnar

- Stop Explaining Black Box Machine Learning Models for High Stakes Decisions and Use Interpretable Models Instead, Rudin et al., 2019

- Interpretable Machine Learning: Fundamental Principles and 10 Grand Challenges, Rudin et al., 2021

- Quantifying Model Complexity via Functional Decomposition for Better Post-Hoc Interpretability, Molnar et al., 2020