Computational/Model Bias

The various forms of mathematical bias that arise from the choice of model and parameters of interest for a given dataset. These include, but are not limited to, the bias-variance tradeoff, frequency bias, and inductive bias.

Related terms: Data Bias, Decision-Making Bias, Bias-Variance Tradeoff, Frequency Bias, Inductive Bias

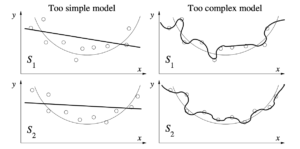

When developing a model for a dataset, we may initially choose a simple model (e.g., a linear model) to describe the data (upper left-hand panel). Simple models, however, often fail to capture the regularities in the data and tend to underfit it (high bias, low variance). Capturing those regularities usually requires a low-bias model. Lowering the bias of the predictions, though, comes at the cost of increasing their variance. A low-bias, high-variance model, as shown on the right, overfits the data: it pays too much attention to the training data and does not generalize well to new data. In other words, increasing the prediction variance tends to increase overfitting to the training data. The bias-variance tradeoff differs from one learning algorithm to another.
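The tradeoff can be illustrated by fitting polynomials of increasing degree to the same small, noisy sample and comparing error on the training data with error on fresh held-out data. This is a minimal sketch, assuming NumPy; the ground-truth curve y = sin(2πx), the noise level, the sample sizes, and the degrees (1, 3, 12) are hypothetical choices for illustration and are not taken from the figure. The degree-1 fit plays the role of the high-bias model, while the degree-12 fit plays the role of the high-variance model.

```python
import numpy as np


def make_data(n, rng, noise=0.3):
    """Sample n noisy points from a hypothetical ground truth y = sin(2*pi*x)."""
    x = np.sort(rng.uniform(0.0, 1.0, n))
    y = np.sin(2 * np.pi * x) + rng.normal(0.0, noise, n)
    return x, y


def mse(y_true, y_pred):
    """Mean squared error between observed and predicted values."""
    return float(np.mean((y_true - y_pred) ** 2))


def fit_and_score(degree, x_train, y_train, x_test, y_test):
    """Least-squares polynomial fit of the given degree; returns (train MSE, test MSE)."""
    p = np.polynomial.Polynomial.fit(x_train, y_train, degree)
    return mse(y_train, p(x_train)), mse(y_test, p(x_test))


rng = np.random.default_rng(0)
x_train, y_train = make_data(15, rng)   # small training set, easy to overfit
x_test, y_test = make_data(200, rng)    # held-out data from the same process

results = {}
for degree in (1, 3, 12):
    results[degree] = fit_and_score(degree, x_train, y_train, x_test, y_test)
    train_err, test_err = results[degree]
    print(f"degree {degree:2d}: train MSE = {train_err:.3f}, test MSE = {test_err:.3f}")
```

Training error can only fall as the degree grows, because each higher-degree polynomial family contains the lower-degree ones. The held-out error is what exposes the tradeoff: the straight line underfits, while the degree-12 curve chases the noise in the 15 training points and typically scores much worse on new data than on the data it was fit to.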